Posts: 1,272 | Threads: 119 | Joined: Apr 2022 | Reputation: 100

(03-27-2024, 01:47 PM) Dimster wrote: Steve, I'm not sure how to measure the speed of the method I'm using to calculate the size of an array and then dimension it. I have a number of files with data that grow in size over time. The method I'm using is to simply count the number of data items in the file and then create the array.

For example:

```qb64
t# = Timer(0.001)
count = 0
For c = 1 To limit: count = count + 1: Next
Dim Shared foo(count)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it."; UBound(foo), t1# - t#
```

Where is t1#?


Posts: 382 | Threads: 56 | Joined: Apr 2022 | Reputation: 13

My apologies, Terence. I meant the code to be an addition to Steve's:

```qb64
ReDim foo(0) As Long 'the array that's going to hold an uncertain amount of data
Randomize Timer
limit = Rnd * 1000000 + 1000000 'now, nobody knows how large this limit is going to be. Right?

'Let's run some tests with various ways to fill an array with this uncertain amount of data
t# = Timer(0.001)
count = 0
Do
    foo(count) = count
    If count < limit Then
        count = count + 1
        ReDim _Preserve foo(count)
    Else
        Exit Do
    End If
Loop
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it."; UBound(foo), t1# - t#

'Now, let's clear all that data and try a different method
ReDim foo(10000000) As Long 'big enough to hold the data, no matter what
t# = Timer(0.001)
count = 0 'reset the counter
Do
    foo(count) = count
    If count < limit Then
        count = count + 1
    Else
        Exit Do
    End If
Loop
ReDim _Preserve foo(count)
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it."; UBound(foo), t1# - t#

'Added test: count the items first, then size the array
t# = Timer(0.001)
count = 0
For c = 1 To limit: count = count + 1: Next
ReDim foo(count) As Long 'REDIM, since foo was already created with REDIM above
t1# = Timer(0.001) 'stop the clock before printing, or the old t1# gets reused
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it."; UBound(foo), t1# - t#
```

Posts: 2,696 | Threads: 327 | Joined: Apr 2022 | Reputation: 217

@Dimster You'd have to time the whole process: the file access once to count the items, and a second time to dim the array and load it. That's going to vary a LOT based on where the file is located and how you're accessing it.

Load the file once into memory, parse it from there to count it, and then load it? That's not going to be so bad speed-wise, but it'll use the most memory, since it holds 2 copies of the same data in memory until the original, unparsed data is freed.
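A minimal QB64 sketch of that in-memory approach, assuming a plain text file with one number per line and at least one line. The file name `sample.txt` and all variable names are hypothetical, and the first few lines only create a test file so the example runs on its own. It reads the file with a single GET, counts line feeds in the string, sizes the array exactly, then parses from memory instead of going back to the disk. The raw string and the parsed array both exist until `buffer$` is cleared, which is the memory cost mentioned above.

```qb64
' Sketch: one read from disk, count in memory, then size the array exactly.
' "sample.txt" and every variable name here are hypothetical.
Dim i As Long, p As Long, startPos As Long, lineCount As Long

' Build a small test file (one number per line) so the sketch runs on its own
Open "sample.txt" For Output As #1
For i = 1 To 5
    Print #1, i * 10
Next
Close #1

' Pull the whole file into one string with a single GET
Open "sample.txt" For Binary As #1
buffer$ = Space$(LOF(1))
Get #1, 1, buffer$
Close #1

' Count line feeds; this assumes every line, including the last, ends with one
p = InStr(buffer$, Chr$(10))
Do While p > 0
    lineCount = lineCount + 1
    p = InStr(p + 1, buffer$, Chr$(10))
Loop

' Size the array exactly, then parse from memory instead of re-reading the disk
ReDim items(1 To lineCount) As Long
startPos = 1
For i = 1 To lineCount
    p = InStr(startPos, buffer$, Chr$(10))
    items(i) = Val(Mid$(buffer$, startPos, p - startPos)) ' VAL stops at a trailing CR
    startPos = p + 1
Next
buffer$ = "" ' release the raw copy once it's parsed

Print "Loaded"; lineCount; "items; the last one is"; items(lineCount)
```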

Access the file from a normal drive, load it line by line for an item count, dim the array to that size, and then load the data? That's going to be slower, and how much slower depends on your drive speed. RAM drive? Speedy! SSD? A little slower. A 9800RPM SATA quad-drive? Fast as heck... well, maybe... how's that SATA setup configured? Speed, size, or security? An old 1980 1600RPM drive? Slow...

Access that file via a network drive, load it line by line for an item count, dim the array to that size, and then load the data? Take a nap if that file is any size at all!! Network transfers, data validity checks, and everything else have to be performed TWICE, line by line, as that file comes across! There are some posts on here about saving .BAS files across network drives and it taking 15 minutes or so to save them line by line!! The method you suggest is definitely NOT one I'd even consider for network use.

So, in all honesty, it'd be hard to get a true reading of how much of a difference it would make overall, as you could only really test it on your own system's data setup. To give an idea, though, of which method has to be faster, just look at what you're doing:

Method 1: Open file. Read file line by line to get number of items in the file. Close file. Open file. Dim array to that size. Read file line by line to get those items into the array. Close file.

vs.

Method 2: Dim oversized array. Open file. Read file line by line directly into array. Close file. Resize array to proper size.

One has to read the whole data file twice. The other only reads it once. The difference in performance here is mainly going to depend on how fast your system transfers that data: in this case, the physical access speed matters more than anything going on with the memory manipulation.
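If there's no safe upper bound for Method 2's oversized array, a common middle ground (a general technique, not something proposed in this thread) is to double the array whenever it fills up and trim it once at the end, so the number of REDIM _PRESERVE calls grows only with the logarithm of the item count. A rough QB64 sketch, with the counter loop standing in for "read the next item from the file"; `cap` and the starting size of 1024 are arbitrary assumptions:

```qb64
' Sketch: grow geometrically, then trim once. cap and its start value are hypothetical.
Dim limit As Long, count As Long, cap As Long
Randomize Timer
limit = Rnd * 1000000 + 1000000 ' an item count the code doesn't know in advance

cap = 1024 ' starting capacity; any small positive number works
ReDim foo(0 To cap - 1) As Long
count = 0
t# = Timer(0.001)
Do While count < limit
    If count = cap Then
        cap = cap * 2 ' double the room only when the array is full
        ReDim _Preserve foo(0 To cap - 1)
    End If
    foo(count) = count ' stand-in for reading the next item from the file
    count = count + 1
Loop
ReDim _Preserve foo(0 To count - 1) ' trim to the real size
t1# = Timer(0.001)

Print Using "###,###,### items, ##.### seconds (double as needed, then trim)"; UBound(foo) + 1, t1# - t#
```

The trade-off versus one huge DIM is that memory use stays within about twice the real data size, at the cost of a handful of copies while the array grows.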

Posts: 2,696 | Threads: 327 | Joined: Apr 2022 | Reputation: 217

To give you a measurable test, so you have an idea of the speed difference, give this a try:

```qb64
ReDim foo(0) As Long 'the array that's going to hold an uncertain amount of data
Randomize Timer
limit = Rnd * 100000 + 100000 'now, nobody knows how large this limit is going to be. Right?

'Let's run some tests with various ways to fill an array with this uncertain amount of data
Open "temp.txt" For Output As #1 'write the data to disk to see how that affects things.
For i = 1 To limit
    Print #1, i
Next
Close

Open "temp.txt" For Binary As #1
t# = Timer(0.001)
count = 0
Do
    Line Input #1, temp$
    foo(count) = Val(temp$)
    count = count + 1
    ReDim _Preserve foo(count) 'resize as we go
Loop Until EOF(1)
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it. (ReDim as we go)"; UBound(foo), t1# - t#

'Now, let's clear all that data and try a different method
ReDim foo(10000000) As Long 'big enough to hold the data, no matter what
t# = Timer(0.001)
count = 0 'reset the counter
Seek #1, 1 'go back to the beginning of the file
Do
    Line Input #1, temp$
    foo(count) = Val(temp$)
    count = count + 1
Loop Until EOF(1)
ReDim _Preserve foo(count)
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it. (ReDim, then resize)"; UBound(foo), t1# - t#

'Now, let's clear all that data and try a different method
ReDim foo(0) As Long 'erase the old data
t# = Timer(0.001)
count = 0 'reset the counter
Seek #1, 1 'go back to the beginning of the file
Do
    Line Input #1, temp$
    count = count + 1
Loop Until EOF(1)
Seek #1, 1 'go back to the beginning of that file
ReDim _Preserve foo(count)
For i = 1 To count
    Line Input #1, temp$
    foo(i - 1) = Val(temp$) 'fill slots 0 to count - 1, like the other two methods
Next
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it. (Count, then ReDim)"; UBound(foo), t1# - t#
Close #1
```

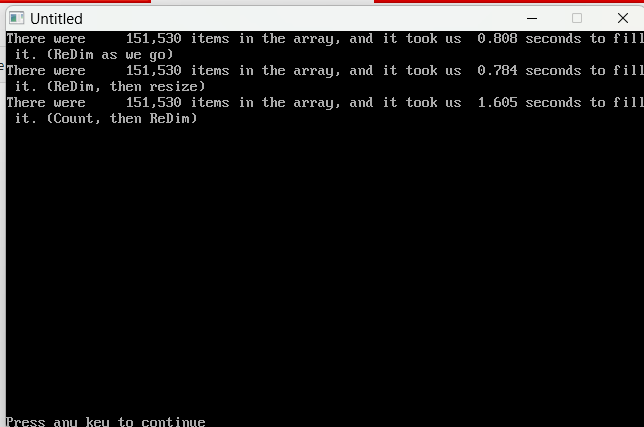

And this is the speed difference on my laptop, which loads and saves from an SSD: counting the items first and then loading line by line still takes twice as long as the other methods. Disk access is slow; it's usually the true performance bottleneck in any routine that uses it. The fewer reads and writes you make to your disk, the better off you are performance-wise.

One thing to note here: I reduced my test size by a factor of 10 for this. Instead of testing millions of items, we're only testing a few hundred thousand. That's how much disk access affects the speed of a routine.

Posts: 382 | Threads: 56 | Joined: Apr 2022 | Reputation: 13

Thanks Steve, and Delsus for the question. Sorry if I took this off topic.
