03-27-2024, 06:58 PM

To give you a measurable test, and an idea of the speed difference, give this a try:

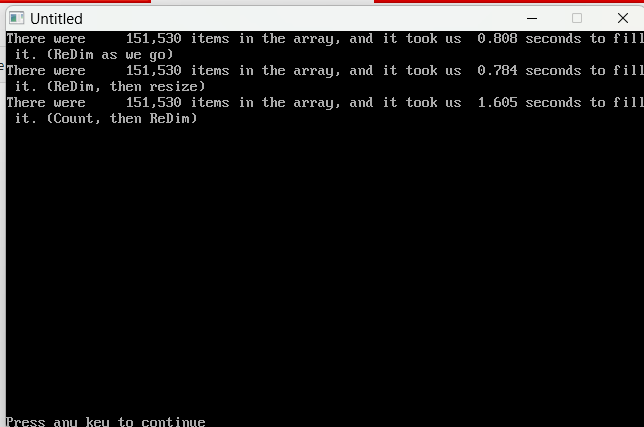

And this is the speed difference on my laptop, which loads and saves from an SSD. Even there, the version that reads the file line by line twice (once to count the lines, then again to fill the array) takes about twice as long as the other methods. Disk access is slow, and it's usually the real performance bottleneck in any routine that touches it. The fewer reads/writes you make to your disk, the better off you are, performance-wise.

One thing to note here: I reduced my test size by a factor of 10 for this. Instead of testing millions of items, we're only testing 100,000 to 200,000 (the range `limit` is drawn from). That reduction alone shows how much disk access affects the speed of a routine.

Code:

```basic
ReDim foo(0) As Long 'the array that's going to hold an uncertain amount of data
Randomize Timer
limit = Rnd * 100000 + 100000 'now, nobody knows how large this limit is going to be. Right?

'Let's run some tests with various ways to fill an array with this uncertain amount of data
Open "temp.txt" For Output As #1 'write the data to disk to see how that affects things.
For i = 1 To limit
    Print #1, i
Next
Close

Open "temp.txt" For Binary As #1

'Method 1: grow the array by one element after every line we read
t# = Timer(0.001)
count = 0
Do
    Line Input #1, temp$
    foo(count) = Val(temp$)
    count = count + 1
    ReDim _Preserve foo(count) 'resize as we go
Loop Until EOF(1)
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it. (ReDim as we go)"; UBound(foo), t1# - t#

'Now, let's clear all that data and try a different method
'Method 2: make the array oversized once, fill it, then trim it to the real size
ReDim foo(10000000) As Long 'big enough to hold the data, no matter what
t# = Timer(0.001)
count = 0 'reset the counter
Seek #1, 1 'go back to the beginning of the file
Do
    Line Input #1, temp$
    foo(count) = Val(temp$)
    count = count + 1
Loop Until EOF(1)
ReDim _Preserve foo(count)
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it. (ReDim, then resize)"; UBound(foo), t1# - t#

'Now, let's clear all that data and try a different method
'Method 3: read the whole file once just to count the lines, size the array, then read it again
ReDim foo(0) As Long 'erase the old data
t# = Timer(0.001)
count = 0 'reset the counter
Seek #1, 1 'go back to the beginning of the file
Do
    Line Input #1, temp$
    count = count + 1
Loop Until EOF(1)
Seek #1, 1 'go back to the beginning of that file
ReDim _Preserve foo(count)
For i = 1 To count
    Line Input #1, temp$
    foo(i - 1) = Val(temp$) 'each value gets its own slot (0 To count - 1), same as the other methods
Next
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it. (Count, then ReDim)"; UBound(foo), t1# - t#
Close #1
```
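Taking the "fewer reads/writes" point one step further, you can hit the disk exactly once: pull the whole file into a string with a single `Get`, then do the counting and parsing in memory. This is an untimed sketch, not a measured result. The fixed 150,000-item file, the `buffer$`/`lineCount&` names, and splitting on line feeds (which should handle both CRLF and LF line endings) are my own assumptions, not part of the test above.

```basic
'Sketch (assumption): ONE disk read, then count and parse everything in memory.
'Recreate a test file in the same format as the benchmark (150,000 is an arbitrary fixed size).
Open "temp.txt" For Output As #1
For i& = 1 To 150000
    Print #1, i&
Next
Close #1

t# = Timer(0.001)
Open "temp.txt" For Binary As #1
buffer$ = Space$(LOF(1)) 'a string exactly as large as the file
Get #1, 1, buffer$ 'a single read pulls in the whole file
Close #1

'Pass 1, in memory: count the line feeds so the array is sized only once
lineCount& = 0
p& = InStr(buffer$, Chr$(10))
Do While p& > 0
    lineCount& = lineCount& + 1
    p& = InStr(p& + 1, buffer$, Chr$(10))
Loop

'Pass 2, in memory: Val() reads the number at the start of each line and ignores the trailing space/CR
ReDim foo(lineCount&) As Long
start& = 1
For i& = 0 To lineCount& - 1
    p& = InStr(start&, buffer$, Chr$(10))
    foo(i&) = Val(Mid$(buffer$, start&, p& - start&))
    start& = p& + 1
Next
t1# = Timer(0.001)
Print Using "There were ###,###,### items in the array, and it took us ##.### seconds to fill it. (One read, then parse)"; UBound(foo), t1# - t#
```

The trade-off is memory. The whole file sits in `buffer$` while it's parsed. That's fine at this test's size, but it's worth keeping in mind for much larger files.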
